Gradient Descent for Simple Linear Regression

Gradient descent for simple linear regression is an optimization technique used to find the best-fitting line by iteratively adjusting the slope (m) and intercept (c) to minimize the error between predicted and actual values.

Equation of Predicted Values
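
For a line with slope $m$ and intercept $c$, the predicted value for an input $x_i$ takes the standard form below. The hat notation $\hat{y}_i$ and the index $i$ are notational assumptions here.

$$
\hat{y}_i = m x_i + c
$$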

Mean Squared Error (MSE) Formula
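
Assuming $n$ data points $(x_i, y_i)$ and predictions of the form $m x_i + c$, the MSE is the average of the squared differences between actual and predicted values. The symbol $J$ for the cost is an assumption made for this sketch.

$$
J(m, c) = \frac{1}{n} \sum_{i=1}^{n} \bigl( y_i - (m x_i + c) \bigr)^2
$$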

Start with initial values for the parameters m (slope) and c (intercept). Then repeatedly adjust both parameters in the direction that lowers the cost function (the MSE): at each step, gradient descent moves each parameter a small amount against its gradient, which reduces the error between predicted and actual values.
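
The adjustment at each step can be written out explicitly. The sketch below assumes the cost is the plain MSE, $J(m, c) = \frac{1}{n} \sum_{i=1}^{n} (y_i - (m x_i + c))^2$, and uses $\alpha$ for the learning rate (step size); both symbols are assumptions, and some texts scale the cost by $\frac{1}{2n}$ instead, which changes the constant factor in the gradients.

$$
\frac{\partial J}{\partial m} = -\frac{2}{n} \sum_{i=1}^{n} x_i \bigl( y_i - (m x_i + c) \bigr),
\qquad
\frac{\partial J}{\partial c} = -\frac{2}{n} \sum_{i=1}^{n} \bigl( y_i - (m x_i + c) \bigr)
$$

$$
m \leftarrow m - \alpha \, \frac{\partial J}{\partial m},
\qquad
c \leftarrow c - \alpha \, \frac{\partial J}{\partial c}
$$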

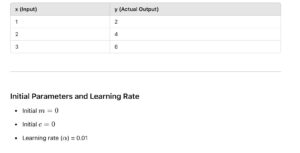

Example: Gradient Descent for Simple Linear Regression

Steps to Perform Gradient Descent (Manual Calculation for 3 Iterations)
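
As a rough illustration of three manual iterations, here is a minimal Python sketch. The data points, the starting values, and the learning rate are all hypothetical choices made for this sketch and are not the values of the original worked example; `gradient_step` and `mse` are illustrative helper names.

```python
# Hypothetical toy data (NOT the article's original example): points on y = 2x + 1.
xs = [1.0, 2.0, 3.0]
ys = [3.0, 5.0, 7.0]


def mse(m, c, xs, ys):
    """Mean squared error of the line y = m*x + c on the data."""
    return sum((y - (m * x + c)) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradient_step(m, c, xs, ys, lr):
    """Apply one gradient descent update to slope m and intercept c."""
    n = len(xs)
    # Residuals: actual value minus predicted value.
    errors = [y - (m * x + c) for x, y in zip(xs, ys)]
    # Partial derivatives of the MSE with respect to m and c.
    grad_m = (-2.0 / n) * sum(x * e for x, e in zip(xs, errors))
    grad_c = (-2.0 / n) * sum(errors)
    # Move each parameter against its gradient.
    return m - lr * grad_m, c - lr * grad_c


m, c = 0.0, 0.0  # assumed starting values
lr = 0.05        # assumed learning rate
history = [(m, c, mse(m, c, xs, ys))]

for i in range(1, 4):
    m, c = gradient_step(m, c, xs, ys, lr)
    history.append((m, c, mse(m, c, xs, ys)))
    print(f"Iteration-{i}: m={m:.4f}, c={c:.4f}, MSE={history[-1][2]:.4f}")
```

With a learning rate this small relative to the data, the MSE should drop at every step; a rate that is too large can make the updates overshoot and diverge.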

Predicted Value Formula

Iteration-1

Iteration-2

Iteration-3

Summary After 3 Iterations

With each iteration of gradient descent, the slope (m) and intercept (c) move closer to their optimal values. The process can continue until the gradients become very small, for example until they fall below a predefined threshold.
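
The stopping rule described above can be sketched as a loop that ends once both gradients fall below a tolerance. This is a minimal sketch under stated assumptions: the function name `fit_line`, the tolerance `tol`, the cap `max_iters`, the learning rate, and the toy data are all hypothetical and chosen only for illustration.

```python
def fit_line(xs, ys, lr=0.05, tol=1e-6, max_iters=100_000):
    """Run gradient descent on the MSE until the gradients are below tol.

    Returns the slope, the intercept, and the number of iterations used.
    """
    n = len(xs)
    m, c = 0.0, 0.0  # assumed starting values
    for iteration in range(1, max_iters + 1):
        # Residuals: actual value minus predicted value.
        errors = [y - (m * x + c) for x, y in zip(xs, ys)]
        grad_m = (-2.0 / n) * sum(x * e for x, e in zip(xs, errors))
        grad_c = (-2.0 / n) * sum(errors)
        # Stop when both gradients are very small.
        if max(abs(grad_m), abs(grad_c)) < tol:
            return m, c, iteration
        m -= lr * grad_m
        c -= lr * grad_c
    # Safety cap reached without meeting the threshold.
    return m, c, max_iters


# Hypothetical toy data lying exactly on y = 2x + 1.
m, c, iterations = fit_line([1.0, 2.0, 3.0], [3.0, 5.0, 7.0])
print(f"m={m:.4f}, c={c:.4f}, iterations={iterations}")
```

The iteration cap is a design choice for safety: if the learning rate is too large for the data, the gradients may never shrink below the threshold, and the cap keeps the loop from running forever.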