Back to: Neural Networks

What is a Gated Recurrent Unit (GRU)?

A Gated Recurrent Unit (GRU) is a type of neural network used for tasks involving sequences, like predicting the next word in a sentence or analyzing time series data. It helps the model “remember” important information from earlier in the sequence while “forgetting” less useful details.

Real-Time Applications of GRU (Gated Recurrent Unit)

GRUs are widely used in real-world scenarios involving sequential or time-dependent data. Here are some practical applications:

1. Time Series Forecasting

Use Case: Predicting stock prices, energy consumption, or weather.

- Example: A power company uses GRUs to predict energy demand based on past usage patterns, weather data, and time of day. This helps optimize power generation and reduce costs.

2. Natural Language Processing (NLP)

Use Case: Text generation, machine translation, and sentiment analysis.

- Example:

- GRUs power chatbots to generate human-like responses in real time.

- Translate phrases from one language to another (e.g., English to French) by learning the sequence of words.

3. Speech Recognition

Use Case: Converting spoken language into text.

- Example: GRUs are used in applications like Google Voice or Siri to process audio waveforms and predict corresponding words.

4. Real-Time Traffic Prediction

Use Case: Estimating traffic congestion on roads.

- Example: A navigation app like Google Maps uses GRUs to analyze traffic patterns from the past few hours and predict future congestion in real-time.

5. Financial Market Analysis

Use Case: Predicting stock trends or credit card fraud detection.

- Example:

- Predict stock price movement based on historical data.

- Detect anomalies in real-time credit card transactions to prevent fraud.

6. Anomaly Detection

Use Case: Identifying unusual patterns in time series data.

- Example: Monitoring server logs in a data center to detect and alert administrators of unusual system behavior, such as sudden CPU spikes.

7. Healthcare Monitoring

Use Case: Predicting patient health metrics.

- Example:

- Predicting heart rate or blood sugar levels for patients wearing IoT health devices.

- Early detection of diseases by analyzing sequences of diagnostic test results.

8. Recommendation Systems

Use Case: Providing personalized recommendations based on user behavior.

- Example:

- Streaming platforms like Netflix use GRUs to analyze viewing history and recommend shows/movies.

- E-commerce platforms like Amazon recommend products by analyzing browsing and purchase patterns.

9. IoT and Smart Systems

Use Case: Real-time predictions and optimizations in smart devices.

- Example:

- A smart thermostat predicts room temperature trends and adjusts settings for optimal comfort.

- GRUs in drones process sensor data to adjust flight paths dynamically.

10. Real-Time Translation

Use Case: Translating speech or text on the fly.

- Example: Google Translate uses GRUs to interpret sentences and translate them in real time, maintaining the sequence of words for grammatical accuracy.

Why GRUs are Preferred for Real-Time Applications?

- Computational Efficiency: GRUs are simpler than LSTMs, making them faster to train and infer.

- Memory Retention: GRUs capture long-term dependencies, crucial for sequential data.

- Dynamic Adaptability: They update predictions based on incoming data in real-time, ideal for dynamic environments.

How GRU Works: Components and Their Uses

A Gated Recurrent Unit (GRU) is a simplified type of recurrent neural network designed to capture patterns in sequential data. It consists of two main gates: Update Gate and Reset Gate, which control how information flows through the network.

Components of GRU

1. Update Gate

- What it does: Decides how much of the previous stock trends should be remembered and how much should be updated with new information.

- Why it matters: Stock prices often follow long-term trends. The update gate helps the GRU remember important past trends, like a stock’s steady rise or fall. It ensures that when the market has been behaving consistently (e.g., prices going up), the GRU doesn’t forget it just because of a short-term fluctuation.Example: If the stock has been rising steadily, the update gate will help the model keep that memory intact, so the model doesn’t predict a sudden drop without reason.

2. Reset Gate

- What it does: Decides how much of the past data should be forgotten to make room for new information.

- Why it matters: In stock price prediction, sometimes old data (like a past market event) may no longer be relevant for future predictions. The reset gate helps the model ignore irrelevant past events and focus on more recent trends.Example: If a stock had a sudden price spike due to an announcement two weeks ago, the reset gate will help the model forget that information if it’s no longer affecting current prices.

3. Candidate Hidden State

- What it does: Proposes new information that might be important for predicting the stock price, based on the current market situation.

- Why it matters: Stock prices are influenced by many factors like news, trends, and market events. The candidate hidden state gathers this new information and makes sure it’s added to the memory. It’s like looking at the current situation to decide what might happen next.Example: If the stock has been volatile recently, the candidate hidden state helps the model recognise this pattern and incorporate it into its prediction.

4. Final Hidden State

- What it does: Combines everything: the old trends (from Ht−1, the new data (from Xt), and the candidate memory , to make the final prediction about the stock’s future behavior.

- Why it matters: This is the final “memory” that will be used for making the stock price prediction. It balances both past trends and current data, helping the model understand long-term patterns while still adapting to recent changes.Example: The final hidden state might say, “The stock has been rising for the last month, but today’s news indicates a slight drop.” This is what guides the model’s prediction.

Mathematical Intuition of GRU (Gated Recurrent Unit)

GRU is a type of Recurrent Neural Network (RNN) designed to capture sequential dependencies and decide when to remember or forget information, making it more efficient than traditional RNNs. Let’s break down the components of GRU with their mathematical equations and intuition:

1. Reset Gate (rt)

The reset gate helps the GRU decide how much of the previous memory should be “forgotten” when updating the hidden state. It allows the model to reset the memory for the current time step if the input data is significantly different from the previous one.

2. Update Gate (zt)

The update gate controls how much of the previous memory should be carried forward, and how much should be updated with new information.

Overall GRU Mechanism

- Input: The GRU receives the current input and the previous hidden state .

- Reset Gate: Decides how much of the previous memory to forget .

- Update Gate: Decides how much of the previous memory to keep .

- Candidate Hidden State: Proposes a new memory based on the input and the modified previous memory .

- Final Hidden State: Combines the old and new memory to produce the final output.

Model Parameters:

- Input size (vocabulary size) = 5 (one-hot encoded words).

- Hidden size (GRU) = 3 (number of hidden units).

- Learning rate = 0.1.

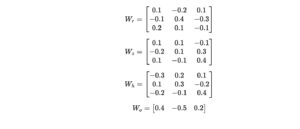

- Weight Matrices:

- Wr: Reset gate weight matrix (3×6)

- Wz: Update gate weight matrix (3×6)

- Wh: Candidate hidden state weight matrix (3×6)

- Wo: Output weight matrix (1×3)

Let’s assume initial random weight matrices as:

Forward Pass

Backward Pass and Weight Update:

The loss function is Binary Cross-Entropy and will be backpropagated to update the weights. We compute the gradient for each gate and weight matrix.

Gradients:

- Compute the gradient of the output with respect to the loss.

- Use the chain rule to propagate the gradients back through each gate and candidate hidden state.

For the reset gate, the gradient with respect to weights is: